Overfitting and Generalization of AI in Medical Imaging

If we are to trust AI to assist physicians in patient care, we need to understand the strengths and weaknesses of the technology. Clinicians need AI that is both accurate and generalizable. While major strides have been made in accuracy, generalizability remains a challenge when AI is implemented in the real world, which makes it more difficult to deploy AI for patient care.

Overfitting, a major obstacle to generalizability, is not unique to radiology, but its implications for patient care and clinical decision making are significant. It can occur whenever limited data are available to train the algorithm and/or the training data do not represent the conditions under which the model will be expected to perform.

What is overfitting?

Overfitting in AI describes models that perform very well on the training set but poorly in practice. Essentially, they have trouble generalizing what they “learned” from the training set. This occurs because the model fits the training set so closely that it also internalizes the irrelevant details (noise) present in it.
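To make the definition concrete, here is a minimal plain-Python sketch on made-up one-dimensional data (not imaging data). The true rule is assumed to be "positive if x > 0.5", and two training labels are deliberately flipped to act as noise. A memorizing model (1-nearest-neighbour) reproduces every training label, noise included, while a simpler single-threshold model ignores the noise. All names and numbers are hypothetical and chosen only for illustration.

```python
# Hypothetical 1-D data: the true rule is "positive if x > 0.5",
# but the labels at x=0.2 and x=0.8 are flipped to simulate noise.
train_x = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
train_y = [0,   1,   0,   0,   1,   1,   0,   1]
test_x = [0.12, 0.18, 0.32, 0.42, 0.58, 0.72, 0.78, 0.88]
test_y = [0,    0,    0,    0,    1,    1,    1,    1]


def accuracy(model, xs, ys):
    """Fraction of points where the model's prediction matches the label."""
    return sum(model(x) == y for x, y in zip(xs, ys)) / len(xs)


def memorizer(x):
    """1-nearest-neighbour: returns the label of the closest training point,
    so it reproduces every training label, including the noisy ones."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]


def fit_threshold(xs, ys):
    """Simpler model: a single cut-off chosen to maximize training accuracy."""
    s = sorted(xs)
    candidates = [s[0] - 1] + [(a + b) / 2 for a, b in zip(s, s[1:])] + [s[-1] + 1]
    return max(candidates, key=lambda c: accuracy(lambda x: int(x > c), xs, ys))


t = fit_threshold(train_x, train_y)


def threshold_model(x):
    return int(x > t)


for name, model in [("memorizer", memorizer), ("threshold", threshold_model)]:
    print(name, accuracy(model, train_x, train_y), accuracy(model, test_x, test_y))
# memorizer 1.0 0.75
# threshold 0.75 1.0
```

The memorizer scores perfectly on the training set but worse on held-out points, which is the overfitting pattern described above; the less flexible model does the reverse.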

Overfitting is a problem that originates in the training phase of a model, and its causes can be divided primarily into data-level and model-level issues. At the data level, inadequate training data and training data that are not representative of the general patient population create most of the issues. Misclassification of images from similar classes (e.g., different pathologies that present similarly on imaging) also introduces errors into the training data.

At the model level, overfitting can be mitigated by techniques such as dropout, L1/L2 regularization, and batch normalization. These methods constrain the model, for example by randomly deactivating nodes (also called “neurons”) during training or by penalizing large weights, so that the feature detected by any single node does not outweigh or overwhelm the others. The ultimate effect is to prevent over-reliance on any single imaging characteristic, thereby improving generalizability.
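A minimal plain-Python sketch of two of the techniques named above: inverted dropout and L1/L2 weight penalties. The function names (`dropout`, `l1_penalty`, `l2_penalty`, `regularized_loss`) and the penalty strengths are illustrative assumptions, not any library's API; deep learning frameworks provide their own built-in versions. Batch normalization is omitted here.

```python
import random


def dropout(activations, p, training=True, rng=None):
    """Inverted dropout: during training, zero each activation with
    probability p and scale the survivors by 1 / (1 - p) so the expected
    activation is unchanged. At inference time it does nothing."""
    if not training or p == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def l1_penalty(weights, lam):
    """L1 term: lam * sum(|w|); tends to drive weights toward zero (sparsity)."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 term (weight decay): lam * sum(w^2); discourages any single large weight."""
    return lam * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Total training loss = data loss + weight penalties."""
    return data_loss + l1_penalty(weights, l1) + l2_penalty(weights, l2)


rng = random.Random(0)
print(dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng))  # each entry is 0.0 or doubled
print(regularized_loss(0.30, [0.5, -2.0, 1.0], l2=0.01))  # ≈ 0.3525
```

Because a different random subset of nodes is silenced on every training step, the network cannot depend on one node always being present; the penalties make large weights, and therefore dominant features, costly.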

In AI models for radiology, image resolution can also create problems. One benefit of lower image resolution is that it reduces the number of inputs and thereby the number of parameters that must be optimized, which lowers the risk of overfitting. On the other hand, accurate diagnosis of subtle pathologies (e.g., pneumothoraces, lung nodules) can benefit from higher-resolution images. One potential workaround is to label the individual pixels that contain the pathology rather than providing whole-image labels.
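The link between resolution and parameter count can be checked with simple arithmetic. The sketch below assumes a hypothetical fully connected layer with 128 units that receives a flattened single-channel image, and, for contrast, a hypothetical 3x3 convolution with 32 filters. It also shows a nuance worth keeping in mind: a convolution's weight count does not depend on image size, so the reduction applies to layers whose inputs scale with the number of pixels.

```python
def dense_layer_params(height, width, channels, units):
    """Weights + biases of a fully connected layer fed the flattened image:
    every one of the H * W * C inputs connects to every unit."""
    inputs = height * width * channels
    return inputs * units + units


def conv_layer_params(kernel, in_channels, filters):
    """Weights + biases of a 2-D convolution; independent of image size."""
    return kernel * kernel * in_channels * filters + filters


for side in (256, 512, 1024):
    print(side, dense_layer_params(side, side, 1, 128), conv_layer_params(3, 1, 32))
# 256 8388736 320
# 512 33554560 320
# 1024 134217856 320
```

Quadrupling the side length (256 to 1024 pixels) multiplies the inputs, and this dense layer's parameters, by roughly 16.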

Let’s look at some images that illustrate overfitting

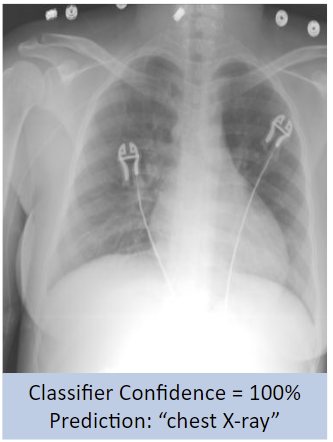

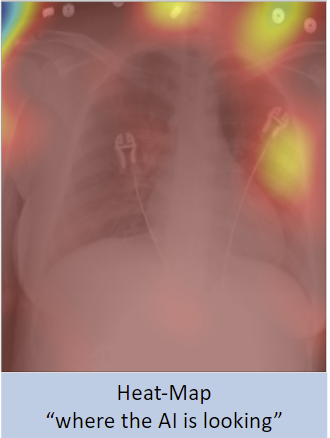

A binary classifier that distinguishes between chest radiographs (CXR) and abdominal radiographs (AbdXR) with 100% accuracy sounds great on the surface. However, analysis of the heat map reveals that the AI model placed a lot of emphasis on EKG leads and gown buttons on the patient’s chest to produce the image label, rather than on features a radiologist would consider.

While the results are not inaccurate and the underlying methodology is not necessarily “wrong,” a radiologist would have used anatomical markers such as lungs, mediastinum, or osseous thoracic structures, not devices present in the image. How well would the model generalize if there were CXRs on the test set that lacked EKG leads, or there were AbdXRs with devices or gown buttons that appeared similar to EKG leads?
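The article does not say which method produced the heat map; occlusion sensitivity is one of several common approaches (gradient-based methods such as Grad-CAM are another), and it is used here only as a stand-in. The plain-Python sketch below covers the image with a patch, one region at a time, and records how much the model's score drops. The 8x8 "image" and `shortcut_score` are hypothetical: the toy model looks only at one bright pixel, standing in for an EKG lead or gown button.

```python
def occlusion_heatmap(image, model, patch=2, fill=0.0):
    """Slide a patch over the image, replace it with `fill`, and record how
    much the model's score drops. Large drops mark regions the model relies on."""
    h, w = len(image), len(image[0])
    base = model(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]  # copy so the original is untouched
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - model(occluded)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat


def shortcut_score(img):
    """Hypothetical model score that depends only on the pixel at row 1, column 6."""
    return img[1][6]


image = [[0.2] * 8 for _ in range(8)]
image[1][6] = 1.0  # the "device"
heat = occlusion_heatmap(image, shortcut_score)
for row in heat:
    print(" ".join(f"{v:.1f}" for v in row))
```

Only the patch containing the bright "device" pixel lights up, which is the kind of evidence that reveals a model keying on external objects rather than anatomy.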

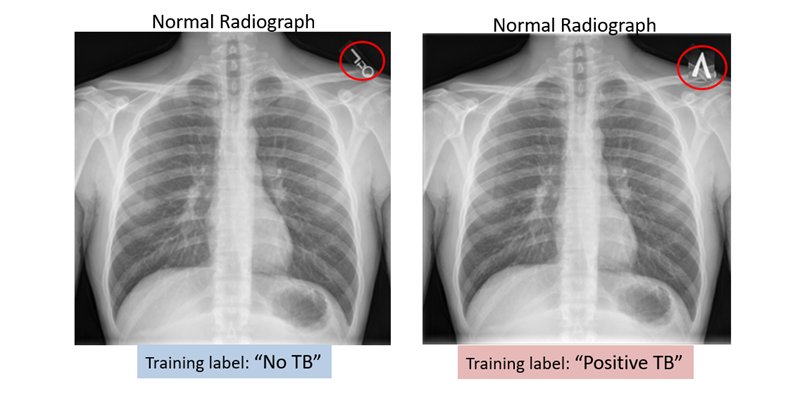

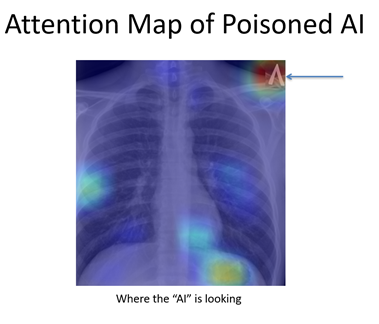

In an unpublished experiment, a TB model with an accuracy greater than 90% was re-trained (fine-tuned) using a small number (fewer than 10) of normal chest radiographs. The only difference in the new data was a label, an inverted “V,” in the top-right corner of the image (see image). As a result, the model labeled radiographs bearing the inverted “V” as positive for TB even though they were normal: this “poisoned” model had learned to classify radiographs as having TB based on the label alone.
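The same failure can be reproduced in miniature. The sketch below is a toy, not the experiment's deep network: a classic perceptron stands in for the model, and each radiograph is reduced to two hypothetical binary features, `disease_signal` (TB findings present) and `marker` (inverted “V” present). Adding a handful of normal images that carry the marker and are labeled TB is enough to make the marker alone trigger a positive prediction.

```python
def train_perceptron(samples, max_epochs=100):
    """Classic perceptron with a bias term. `samples` is a list of
    (features, label) pairs with label 1 (TB) or 0 (normal)."""
    n = len(samples[0][0])
    w, b = [0.0] * n, 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in samples:
            y = 1 if label == 1 else -1
            score = sum(wi * xi for wi, xi in zip(w, x)) + b
            if (1 if score > 0 else -1) != y:  # misclassified: nudge toward y
                w = [wi + y * xi for wi, xi in zip(w, x)]
                b += y
                mistakes += 1
        if mistakes == 0:  # training data perfectly separated
            break
    return w, b


def predict(w, b, x):
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b > 0 else 0


# Hypothetical features: (disease_signal, marker)
tb = [((1, 0), 1)] * 20
normal = [((0, 0), 0)] * 20
poison = [((0, 1), 1)] * 5  # normal radiographs with the marker, labeled TB

clean_w, clean_b = train_perceptron(tb + normal)
poisoned_w, poisoned_b = train_perceptron(tb + normal + poison)

normal_with_marker = (0, 1)
print(predict(clean_w, clean_b, normal_with_marker))        # 0 (normal)
print(predict(poisoned_w, poisoned_b, normal_with_marker))  # 1 (TB)
```

In this toy run the poisoned model ends up giving the marker the same weight as the disease signal, so it has no way to tell a normal, marked radiograph from a TB case.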

Although this was a deliberate experiment, it illustrates that an AI system will use any information at its disposal to make predictions. This can be a strength in some AI use cases, but it also means that systems could be vulnerable to variations in image processing, image coverage, patient positioning, or image labels.
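One way to probe this vulnerability before deployment is to perturb inputs and check whether predictions change. The plain-Python sketch below is a hypothetical stress test: `shift_right` loosely mimics a change in positioning or coverage, `brighten` a change in image processing, and `shortcut_model` is a toy classifier that keys on a single bright pixel. None of these names come from the article or from any library.

```python
def shortcut_model(img):
    """Hypothetical model that (wrongly) keys on one bright pixel at row 1,
    column 6, standing in for a burned-in label or external device."""
    return 1 if img[1][6] > 0.5 else 0


def shift_right(img, fill=0.0):
    """Move the image content one column right (positioning/coverage change)."""
    return [[fill] + row[:-1] for row in img]


def brighten(img, delta=0.1):
    """Uniform intensity change (image processing change), clipped to 1.0."""
    return [[min(1.0, p + delta) for p in row] for row in img]


def stress_test(model, images, perturbations):
    """For each perturbation, the fraction of images whose prediction is unchanged."""
    results = {}
    for name, perturb in perturbations.items():
        unchanged = sum(model(img) == model(perturb(img)) for img in images)
        results[name] = unchanged / len(images)
    return results


with_marker = [[0.2] * 8 for _ in range(8)]
with_marker[1][6] = 1.0
without_marker = [[0.2] * 8 for _ in range(8)]

print(stress_test(shortcut_model, [with_marker, without_marker],
                  {"shift_right": shift_right, "brighten": brighten}))
# {'shift_right': 0.5, 'brighten': 1.0}
```

A one-pixel shift flips the prediction for the marked image, exposing the shortcut even though a uniform brightness change does not.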

What to look out for with AI

As these examples demonstrate, an AI model is only as good as the data used to develop it. Some of the factors discussed in this article point towards a future where AI will augment rather than replace the work that is done by radiologists today.

The role of tomorrow’s radiologists will be not only to appropriately “prescribe” a model for an imaging study but also to promote patient safety by acting as gatekeepers of AI.

Rishi Gadepally, BS, MS2 at Sidney Kimmel Jefferson Medical College & Thomas Jefferson University Rishi.Gadepally@students.jefferson.edu

Paras Lakhani, MD, Associate Professor of Radiology at Sidney Kimmel Jefferson Medical College & Thomas Jefferson University Paras.Lakhani@jefferson.edu
